To keep your website competitive and visible in search engine results in 2024, a robust technical SEO strategy is essential. Here’s a comprehensive SEO checklist to ensure your website is technically optimized:

1. Ensure Your Site Can Be Crawled by Search Engines

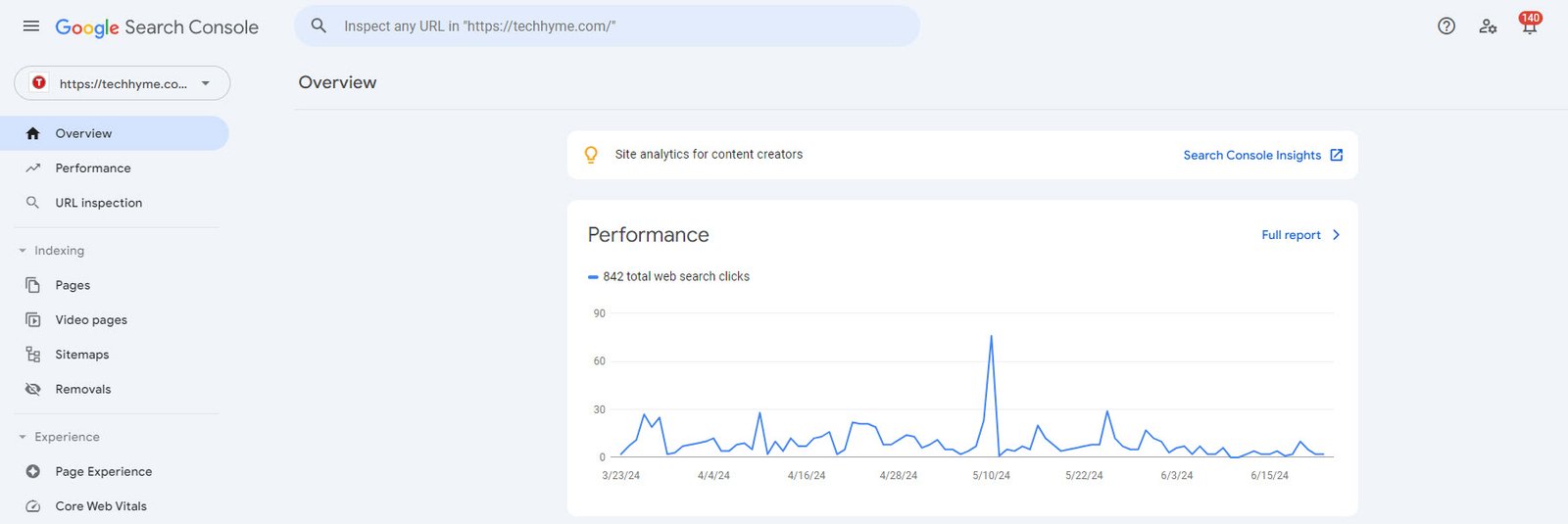

Search engines need to crawl your website to index your content. Use tools like Google Search Console to check crawlability and ensure there are no barriers preventing bots from accessing your site.

2. Use Robots.txt to Manage Which Pages Are Crawled

The robots.txt file, placed in the root directory of your website, tells search engine bots which pages or sections to crawl and which to skip. Configure it carefully so that you do not accidentally block important pages from being crawled.

Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it. To keep a page out of the index, use a `noindex` directive instead.

A `robots.txt` file consists of directives to allow or disallow access to certain parts of your site. The basic syntax includes `User-agent`, `Disallow`, and `Allow` directives.
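Before publishing a rule set, it can help to test how a parser reads it. The following is a minimal sketch using Python's standard `urllib.robotparser`; the `ROBOTS_TXT` rules and the `is_allowed` helper are illustrative assumptions, not part of any official tool. Note that this parser applies rules in file order, while Google picks the most specific matching rule, so results can differ when `Allow` and `Disallow` rules overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; substitute the contents of your own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/technical-seo/", "/admin/login", "/search/?q=seo"):
        url = "https://www.yourdomain.com" + path
        verdict = "allowed" if is_allowed(ROBOTS_TXT, "Googlebot", url) else "blocked"
        print(path, "->", verdict)
```

Running the same check against a list of your most important URLs is a quick way to catch a rule that blocks more than intended.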

Example 1 – Block all web crawlers from accessing the entire site

User-agent: *

Disallow: /

Example 2 – Allow all web crawlers to access the entire site

User-agent: *

Disallow:

Example 3 – Block a specific crawler (e.g., Googlebot) from accessing a directory

User-agent: Googlebot

Disallow: /private-directory/

Example 4 – Block all crawlers from accessing specific files

User-agent: *

Disallow: /secret.html

Disallow: /images/private.jpg

Example 5 – Allow a specific crawler to access everything

User-agent: Bingbot

Disallow:

Example 6 – Block all crawlers from accessing the /temp/ directory, but allow access to one specific file

User-agent: *

Disallow: /temp/

Allow: /temp/important-file.html

Example 7 – Prevent Crawling of Duplicate Content

User-agent: *

Disallow: /duplicate-content/

Example 8 – Block Access to Admin Pages

User-agent: *

Disallow: /admin/

Example 9 – Prevent Crawling of Search Results Pages

User-agent: *

Disallow: /search/

3. Check That Important Pages Are Indexed

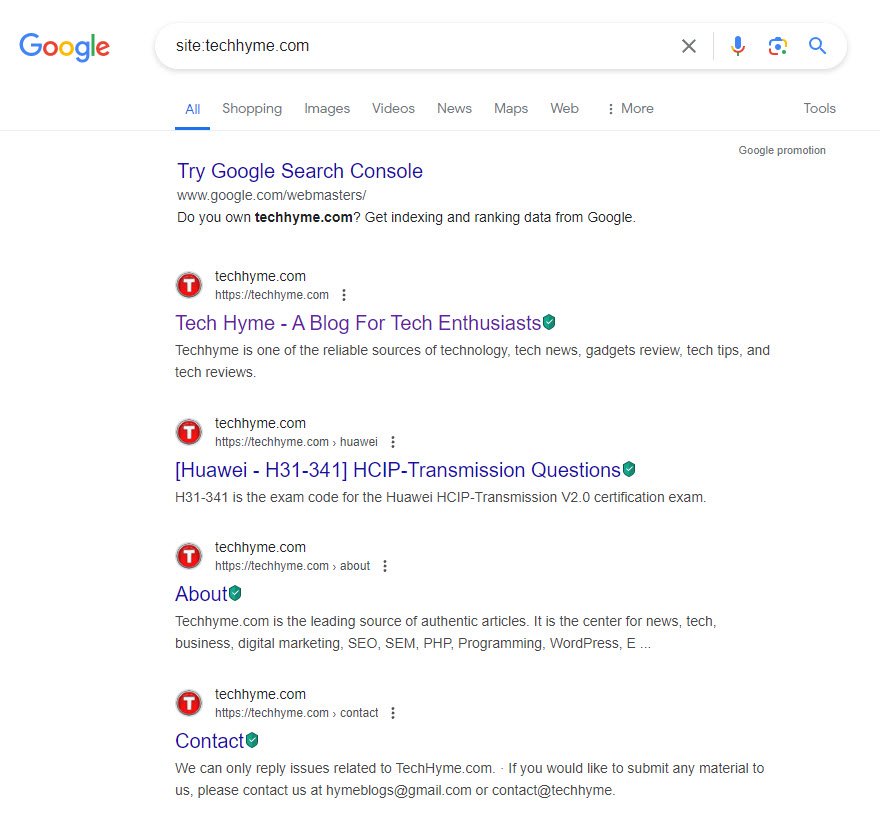

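Verify that your key pages are indexed by search engines. A quick way is to search Google with the `site:yourdomain.com` operator to see which pages are listed. The results are only an approximate sample, so for a definitive answer on a specific page, use the URL Inspection tool in Google Search Console.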

4. Optimize Images and Enable Compression

Large image files can slow down your site. Optimize images by compressing them without losing quality, and use next-gen formats like WebP. Tools like TinyPNG or ImageOptim can help.

5. Use a Content Delivery Network (CDN)

A CDN distributes your website’s content across multiple servers worldwide, reducing load times by serving content from the nearest server to the user. This improves site speed and user experience.

6. Minimize JavaScript and CSS Files

Reduce the size and number of JavaScript and CSS files to improve loading times. Tools like UglifyJS and cssnano minify these files by stripping whitespace and comments and shortening names, without affecting functionality.

7. Test Your Site for Mobile-Friendliness

With the majority of users accessing websites via mobile devices, it’s crucial to ensure your site is mobile-friendly. Google retired its standalone Mobile-Friendly Test tool in December 2023, so use Lighthouse (built into Chrome DevTools) or PageSpeed Insights to identify and fix mobile usability issues.

8. Secure Your Site with an SSL Certificate

SSL certificates (which today use the TLS protocol) encrypt data between the user’s browser and your server, ensuring secure communication. Google also treats HTTPS as a lightweight ranking signal, so secure sites can gain a small advantage in search results.

9. Redirect HTTP to HTTPS

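After securing your site with an SSL certificate, ensure that all HTTP traffic is redirected to the HTTPS version. This can be done via server configurations or through your CMS. Prefer a permanent (301) redirect so that search engines pass ranking signals on to the HTTPS URLs. The examples below are Apache mod_rewrite rules, typically placed in an .htaccess file.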

Example 1 – Redirect All Web Traffic

RewriteEngine On

RewriteCond %{SERVER_PORT} 80

RewriteRule ^(.*)$ https://www.yourdomain.com/$1 [R=301,L]

Example 2 – Redirect Only a Specific Domain

RewriteEngine On

RewriteCond %{HTTP_HOST} ^yourdomain\.com [NC]

RewriteCond %{SERVER_PORT} 80

RewriteRule ^(.*)$ https://www.yourdomain.com/$1 [R=301,L]

Example 3 – Redirect Only a Specific Folder

RewriteEngine On

RewriteCond %{SERVER_PORT} 80

RewriteCond %{REQUEST_URI} folder

RewriteRule ^(.*)$ https://www.yourdomain.com/folder/$1 [R=301,L]

10. Use Canonical Tags to Prevent Duplicate Content Issues

Canonical tags indicate the preferred version of a webpage to prevent duplicate content issues. This helps search engines understand which version of a page to index and rank.
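A canonical tag is a `<link rel="canonical">` element in the page's `<head>`. As a rough sketch of how you might confirm that a page declares the URL you expect, the snippet below parses HTML with Python's standard `html.parser`. The `CanonicalFinder` class, the `find_canonical` helper, and the sample page are illustrative assumptions.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html: str) -> Optional[str]:
    """Return the canonical URL declared in html, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical page used only to show what the tag looks like.
SAMPLE_PAGE = """
<html><head>
  <title>Blue Widgets</title>
  <link rel="canonical" href="https://www.yourdomain.com/widgets/blue/">
</head><body></body></html>
"""

if __name__ == "__main__":
    print(find_canonical(SAMPLE_PAGE))
```

Variants of the same page (for example, URLs with tracking parameters) should all report the same canonical URL.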

11. Create and Submit an XML Sitemap

An XML sitemap helps search engines understand your website structure and discover new content. Create a comprehensive sitemap and submit it to search engines via Google Search Console and Bing Webmaster Tools.
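Most CMS platforms and plugins generate the sitemap for you, but the format itself is simple. Here is a minimal sketch, assuming a hand-maintained list of URLs and last-modified dates, that builds one with Python's standard `xml.etree.ElementTree`. The `build_sitemap` function and the sample URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-15
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.yourdomain.com/", "2024-01-15"),
        ("https://www.yourdomain.com/blog/technical-seo/", "2024-02-01"),
    ]))
```

The output would typically be saved as `sitemap.xml` in the site root and then submitted through the webmaster tools mentioned above.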

12. Ensure the Sitemap Includes All Important Pages and Is Updated Regularly

Regularly update your XML sitemap to include new and important pages. Automated tools and plugins can help keep your sitemap current.

13. Use Clean and Descriptive URLs

Descriptive and clean URLs improve user experience and search engine understanding. Avoid using long, complicated URLs; instead, use concise and keyword-rich URLs.
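One common way to get clean URLs is to derive a short "slug" from the page title. The sketch below shows one possible approach using only the standard library; the `slugify` function is a hypothetical helper, and real CMS platforms apply their own rules.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Decompose accented characters and drop anything that is not ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


if __name__ == "__main__":
    print(slugify("Technical SEO Checklist: 19 Tips for 2024!"))
    # prints "technical-seo-checklist-19-tips-for-2024"
```

A URL such as `/blog/technical-seo-checklist-19-tips-for-2024` is easier to read and share than one built from IDs and query parameters.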

14. Implement Structured Data Using Schema.org

Structured data helps search engines understand your content and can enhance search results with rich snippets. Implement relevant schema markup to improve visibility and click-through rates.
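Schema markup is most often added as a JSON-LD script in the page's HTML. As an illustration, the following sketch generates `Article` markup with Python's `json` module. The `article_json_ld` function and the sample values are assumptions; the properties you need depend on the schema type and on the search engine's requirements.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str) -> str:
    """Return a <script> tag containing schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    # Escape "</" so a value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    print(article_json_ld("Technical SEO Checklist", "Jane Doe", "2024-01-15"))
```

The resulting tag can be placed in the page's `<head>` or `<body>`.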

15. Validate Markup with Google’s Rich Results Test

After adding structured data, validate it using Google’s Rich Results Test to ensure it’s correctly implemented and eligible for rich results in search.

16. Use a Logical Internal Linking Structure

A well-planned internal linking structure helps distribute link equity across your site and improves crawlability. Ensure your internal links follow a logical structure and guide users effectively.
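One way to reason about internal linking is to treat the site as a graph and measure how many clicks each page sits from the homepage. The sketch below runs a breadth-first search over a hand-written link map; `SITE_LINKS`, `click_depths`, and `orphan_pages` are hypothetical names, and in practice the map would come from a crawler export.

```python
from collections import deque

# Hypothetical link map: each page lists the internal pages it links to.
SITE_LINKS = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/technical-seo/"],
    "/services/": [],
    "/blog/technical-seo/": ["/services/"],
    "/old-landing-page/": ["/"],
}


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum clicks from start to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], start: str = "/") -> list[str]:
    """Pages in the link map that cannot be reached by following links from start."""
    known = set(links) | {t for targets in links.values() for t in targets}
    return sorted(known - set(click_depths(links, start)))


if __name__ == "__main__":
    print(click_depths(SITE_LINKS))
    print(orphan_pages(SITE_LINKS))
```

Pages that sit many clicks deep, or that nothing links to at all, are good candidates for extra internal links.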

17. Use 301 Redirects and Canonical Tags

301 redirects inform search engines about permanent page moves, preserving link equity. Canonical tags prevent duplicate content by specifying the preferred version of a page.
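To confirm that a moved page really answers with a permanent redirect, you can inspect the status code and `Location` header of the old URL. This is a minimal sketch using Python's standard `http.client`, which does not follow redirects on its own. Both function names are hypothetical, and `fetch_status_and_location` needs network access and ignores query strings for brevity.

```python
import http.client
from urllib.parse import urlsplit

# 301 and 308 are the permanent redirect status codes.
PERMANENT_STATUSES = {301, 308}


def is_permanent_redirect(status: int, location: str, expected_url: str) -> bool:
    """True if the response is a permanent redirect pointing at expected_url."""
    return status in PERMANENT_STATUSES and location == expected_url


def fetch_status_and_location(url: str) -> tuple[int, str]:
    """Send a HEAD request without following redirects (needs network access)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        connection = http.client.HTTPSConnection(parts.netloc, timeout=10)
    else:
        connection = http.client.HTTPConnection(parts.netloc, timeout=10)
    try:
        connection.request("HEAD", parts.path or "/")
        response = connection.getresponse()
        return response.status, response.getheader("Location", "")
    finally:
        connection.close()
```

For example, `is_permanent_redirect(*fetch_status_and_location("http://yourdomain.com/old-page"), "https://www.yourdomain.com/new-page")` would flag a temporary (302) redirect or a redirect to the wrong target.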

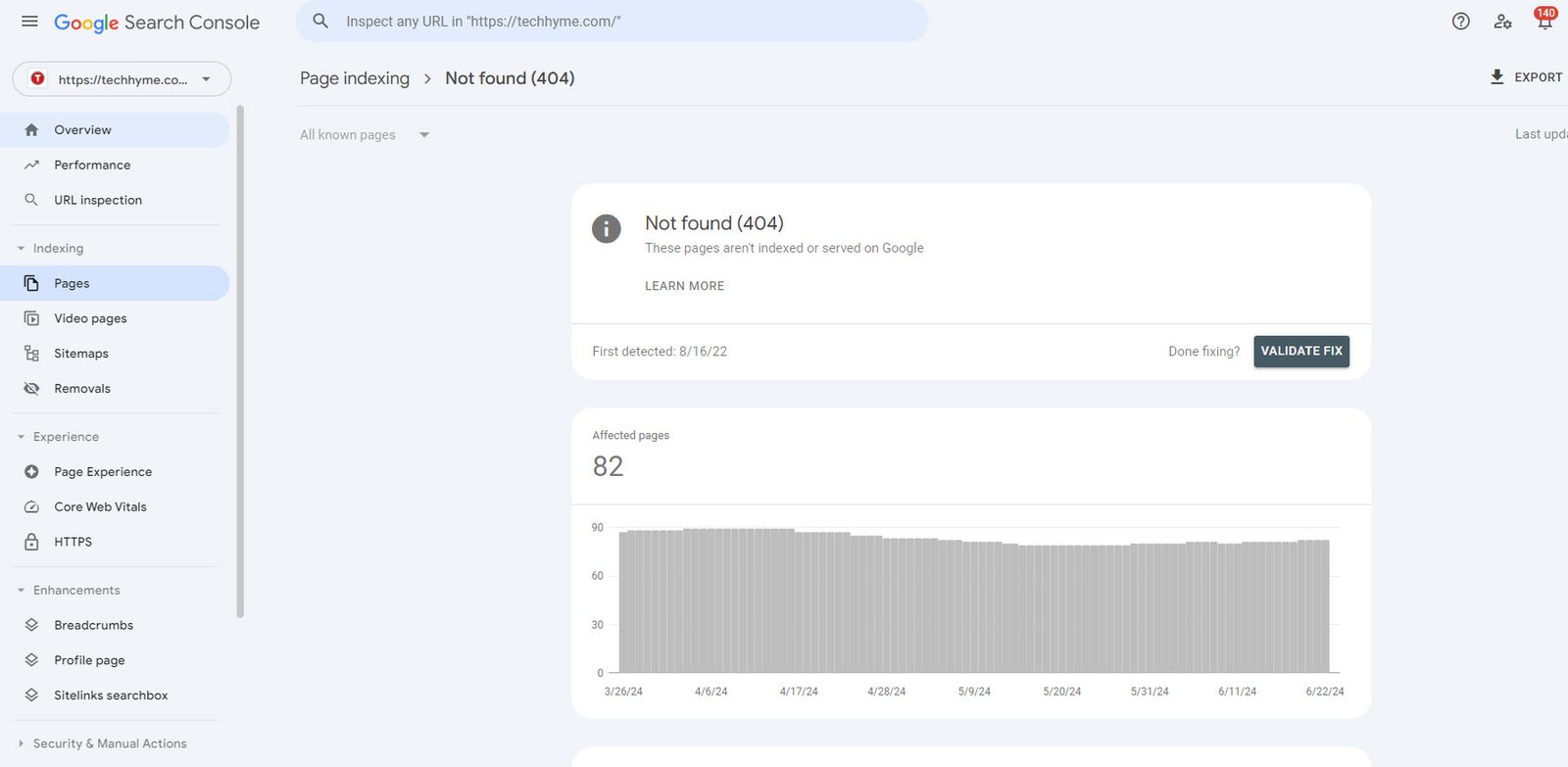

18. Monitor 404 Errors and Fix Broken Links

Regularly check for and fix 404 errors and broken links. Use tools like Google Search Console or third-party crawlers to identify and rectify these issues.
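For a small site, a basic link check can also be scripted. The sketch below collects the links on a page and reports those whose status code is 400 or higher. `LinkCollector` and `broken_links` are hypothetical names, and the `get_status` callback is an assumption: it stands in for whatever HTTP client you use, which keeps the example runnable without network access.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def broken_links(html: str, base_url: str, get_status: Callable[[str], int]) -> list[str]:
    """Return absolute URLs linked from html whose status code is 400 or above."""
    collector = LinkCollector()
    collector.feed(html)
    urls = [urljoin(base_url, href) for href in collector.links]
    return [url for url in urls if get_status(url) >= 400]


if __name__ == "__main__":
    page = '<a href="/about/">About</a> <a href="/old-page/">Old page</a>'
    # Stand-in for real HTTP requests: pretend /old-page/ no longer exists.
    fake_statuses = {"https://www.yourdomain.com/old-page/": 404}
    print(broken_links(page, "https://www.yourdomain.com/", lambda u: fake_statuses.get(u, 200)))
```

Each URL this reports should either be restored, redirected to a relevant page, or removed from the linking page.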

19. Use Hreflang Tags for Multilingual and Multinational Sites

For websites targeting multiple languages or countries, hreflang tags indicate the language and regional targeting of a page. This helps search engines serve the correct version of a page to users.
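Hreflang annotations are usually written as `<link rel="alternate">` tags in the `<head>` of every language version of a page. The sketch below generates such tags from a mapping of language codes to URLs. The `hreflang_tags` function and the sample URLs are hypothetical, and the `x-default` entry is an optional fallback for users who match none of the listed languages.

```python
from html import escape


def hreflang_tags(alternates: dict[str, str], default_url: str) -> str:
    """Build one <link rel="alternate"> tag per language version, plus x-default."""
    lines = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in alternates.items()
    ]
    lines.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}">'
    )
    return "\n".join(lines)


if __name__ == "__main__":
    print(hreflang_tags(
        {
            "en-us": "https://www.yourdomain.com/us/",
            "de-de": "https://www.yourdomain.com/de/",
        },
        default_url="https://www.yourdomain.com/",
    ))
```

Each language version should carry the full set of tags, including one that points to itself, so that the annotations are reciprocal.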

Additional Tip: Regularly Audit Your Site

Regular technical SEO audits help you stay on top of potential issues and opportunities. Use tools like Screaming Frog, SEMrush, or Ahrefs to perform comprehensive audits and maintain your site’s health.

By following this checklist, you can ensure that your website remains technically sound, optimized for search engines, and user-friendly, giving you a competitive edge in 2024 and beyond.
