Machine learning has rapidly become a cornerstone of modern technological advancements, enabling computers to learn from data and make intelligent decisions. One of the key components of machine learning is the use of algorithms that drive the learning process.

In this article, we will explore some popular machine learning algorithms that power various applications and domains.

1. K-Nearest Neighbors (KNN) (Supervised)

K-Nearest Neighbors is a simple yet effective algorithm used for both classification and regression tasks. It finds the ‘k’ data points nearest to a given input and predicts from them: the majority class among those neighbors for classification, or their average value for regression. KNN is non-parametric and particularly useful when the data exhibits local patterns or clusters.
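As a rough illustration of the majority-vote idea, here is a minimal sketch in plain Python. The function name `knn_predict`, the Euclidean distance metric, and the toy data are all assumptions made for this example, not part of any particular library.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbors
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote over the neighbors' labels
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy data (hypothetical): two small, well-separated clusters
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["a", "a", "a", "b", "b", "b"]
prediction = knn_predict(points, labels, (1.1, 1.0), k=3)
```

For regression, the vote would be replaced by the mean of the neighbors' values.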

2. Naive Bayes (Supervised)

Naive Bayes is a probabilistic algorithm based on Bayes’ theorem with the “naive” assumption of independence among features. Despite this simplifying assumption, Naive Bayes often performs surprisingly well for text classification and spam filtering tasks. It’s computationally efficient and requires relatively little training data compared to more complex algorithms.
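The sketch below shows one way the idea could look for spam filtering: a tiny word-count (multinomial) Naive Bayes with add-one smoothing. The function names, the whitespace tokenizer, the smoothing choice, and the four training messages are assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(documents, labels):
    """Count documents per class and words per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in zip(documents, labels):
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary

def predict_naive_bayes(model, text):
    """Return the class with the highest log-posterior score."""
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in class_counts.items():
        score = math.log(doc_count / total_docs)  # log P(class)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen in training
            # log P(word | class) with add-one (Laplace) smoothing
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical training messages
docs = ["win money now", "free prize win", "meeting at noon", "lunch meeting tomorrow"]
tags = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(docs, tags)
result = predict_naive_bayes(model, "win free money")
```

Summing log-probabilities per word is where the independence assumption shows up: each word contributes to the score without regard to the others.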

3. Decision Trees / Random Forests (Supervised)

Decision Trees are tree-like structures where each internal node represents a decision based on a specific feature, leading to different branches or leaves representing the final outcomes. Random Forests are an ensemble technique that combines multiple decision trees to achieve better generalization and reduce overfitting.
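To make the "decision based on a specific feature" concrete, the following sketch picks a single split by minimizing Gini impurity. The text above does not name a split criterion, so Gini is an assumption here, as are the function names and the toy rows. A full tree would apply `best_split` recursively, and a random forest would build many such trees on resampled data.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(rows, labels):
    """Return (feature_index, threshold, weighted_impurity) of the best binary split."""
    best = None
    n = len(rows)
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [lab for row, lab in zip(rows, labels) if row[feature] <= threshold]
            right = [lab for row, lab in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue  # a split must send samples to both sides
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (feature, threshold, score)
    return best

# Hypothetical data: feature 0 separates the classes cleanly, feature 1 does not
rows = [(2.0, 7.0), (3.0, 1.0), (8.0, 6.0), (9.0, 2.0)]
labels = ["no", "no", "yes", "yes"]
split = best_split(rows, labels)
```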

4. Support Vector Machines (SVM) (Supervised)

SVM is a powerful algorithm for classification and regression tasks. For classification, it works by finding the hyperplane that separates data points of different classes in the feature space with the widest possible margin. SVM can handle high-dimensional data, and the kernel trick lets it handle data that is not linearly separable by implicitly working in a higher-dimensional space.
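The kernel trick can be checked numerically on a small case. The sketch below assumes a degree-2 polynomial kernel on 2-D inputs (one of several common kernels; the choice and the function names are illustrative) and shows that the kernel value equals a dot product in an explicit 3-D feature space, without ever computing the mapping.

```python
import math

def poly_kernel(x, z):
    """Degree-2 polynomial kernel (x . z)^2, computed in the original 2-D space."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2

def feature_map(x):
    """Explicit 3-D feature map whose dot product equals poly_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

x, z = (1.0, 2.0), (3.0, -1.0)
kernel_value = poly_kernel(x, z)                    # no explicit mapping needed
mapped_value = dot(feature_map(x), feature_map(z))  # same quantity via the mapping
```

Both values agree up to floating-point rounding, which is why an SVM can operate in the richer space while only ever evaluating the kernel.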

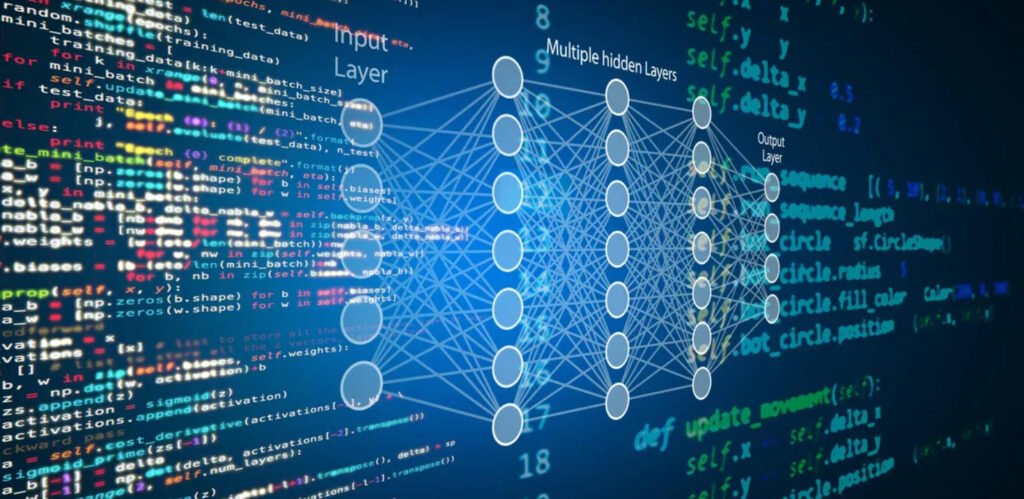

5. Neural Networks (Supervised)

Neural Networks are the backbone of deep learning and are loosely inspired by the structure and functioning of the human brain. These networks consist of interconnected nodes, or neurons, arranged in input, hidden, and output layers. Neural Networks are highly capable of learning complex patterns and have revolutionized various fields, such as image recognition, natural language processing, and game playing.
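A forward pass through the layers can be sketched in a few lines. The network below has two inputs, two hidden neurons with ReLU activation, and one output. Its weights are hand-picked for illustration so that it computes XOR; a real network would learn its weights from data, which this sketch does not show.

```python
def relu(values):
    """ReLU activation: negative values become zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of the inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

# Hand-picked (not learned) weights, chosen so the network computes XOR
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def forward(inputs):
    """Input layer -> hidden layer (ReLU) -> output layer."""
    hidden = relu(dense(inputs, HIDDEN_W, HIDDEN_B))
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]

outputs = [forward(pair) for pair in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0])]
```

XOR is a useful toy case because no single linear layer can compute it; the hidden layer is what makes it possible.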

6. Hidden Markov Models (HMM) (Supervised/Unsupervised)

Hidden Markov Models are probabilistic models commonly used in sequential data analysis, such as speech recognition, bioinformatics, and handwriting recognition. HMMs involve two layers – the observed layer (visible states) and the hidden layer (hidden states) – connected through probabilities, making them suitable for modeling systems with unobservable variables.
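One standard computation on an HMM is the forward algorithm, which gives the probability of an observed sequence by summing over all hidden-state paths. The sketch below is a minimal version; the weather states, activities, and every probability in it are made-up values for illustration.

```python
def forward_likelihood(observations, states, start_p, trans_p, emit_p):
    """Forward algorithm: probability of an observation sequence under an HMM."""
    # alpha[s] = P(observations so far, current hidden state = s)
    alpha = {s: start_p[s] * emit_p[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {
            s: emit_p[s][obs] * sum(alpha[prev] * trans_p[prev][s] for prev in states)
            for s in states
        }
    return sum(alpha.values())

# Hypothetical model: hidden weather states, observed activities
states = ("rainy", "sunny")
start_p = {"rainy": 0.6, "sunny": 0.4}
trans_p = {
    "rainy": {"rainy": 0.7, "sunny": 0.3},
    "sunny": {"rainy": 0.4, "sunny": 0.6},
}
emit_p = {
    "rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}
likelihood = forward_likelihood(["walk", "shop", "clean"], states, start_p, trans_p, emit_p)
```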

7. Clustering (Unsupervised)

Clustering is an unsupervised learning technique used to group similar data points together based on their similarity or distance in the feature space. K-Means is one of the most popular clustering algorithms: it iteratively partitions data into ‘k’ clusters by assigning each point to its nearest cluster center and then recomputing the centers. Clustering finds application in customer segmentation, image segmentation, anomaly detection, and more.
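The iterative partitioning in K-Means can be sketched as alternating assignment and update steps (often called Lloyd's algorithm). In this minimal version the starting centroids are passed in explicitly to keep the run deterministic; the function name, the fixed iteration count, and the sample points are assumptions for the example.

```python
import math

def k_means(points, centroids, iterations=10):
    """Alternate assignment and centroid-update steps for a fixed number of rounds."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid)
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical data: two visible groups
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
final_centroids, clusters = k_means(points, [(0.0, 0.0), (10.0, 10.0)])
```

In practice the starting centroids are usually chosen at random or with a seeding heuristic, and the loop stops once assignments no longer change.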

8. Feature Selection (Unsupervised)

Feature Selection is a critical step in machine learning that involves identifying and selecting the most relevant features from a large set of input features. The objective is to improve model performance, reduce complexity, and speed up training. Techniques like Recursive Feature Elimination and feature importance from tree-based algorithms aid in feature selection. Note that both of these techniques score features using a model fitted to labeled data.
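Recursive Feature Elimination and tree-based importances both need a fitted model, so the sketch below swaps in a simpler filter-style approach instead: rank each feature by the absolute Pearson correlation with the target and keep the top few. This is a different technique from the two named above, and the function names and data are hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_x * var_y)

def select_top_features(columns, target, keep=2):
    """Rank named feature columns by |correlation| with the target and keep the best."""
    scores = {name: abs(pearson(values, target)) for name, values in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:keep]

# Hypothetical dataset: one informative column, one noisy column, one constant column
columns = {
    "size": [1.0, 2.0, 3.0, 4.0, 5.0],
    "noise": [3.0, 1.0, 4.0, 1.0, 5.0],
    "constant": [7.0, 7.0, 7.0, 7.0, 7.0],
}
target = [2.0, 4.0, 6.0, 8.0, 10.0]
selected = select_top_features(columns, target, keep=2)
```

Correlation only captures linear, one-feature-at-a-time relationships, which is one reason model-based methods are often preferred.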

9. Feature Transformation (Unsupervised)

Feature Transformation involves converting data into a more suitable representation to enhance the learning process or make it more interpretable. Principal Component Analysis (PCA) is a popular technique for reducing dimensionality while preserving the most relevant information. Feature scaling, such as normalization or standardization, is also a common transformation to ensure features are on the same scale.
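The two scaling transformations mentioned above can be written out directly. This is a minimal sketch for a single numeric column, assuming the column is not constant (otherwise both functions would divide by zero); the function names and sample values are illustrative.

```python
import math

def min_max_normalize(values):
    """Rescale values linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]

def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [(v - mean) / std for v in values]

# Hypothetical column of heights in centimeters
heights = [150.0, 160.0, 170.0, 180.0, 190.0]
normalized = min_max_normalize(heights)
standardized = standardize(heights)
```

Normalization bounds the values but is sensitive to outliers; standardization leaves them unbounded but centered, which many distance-based and gradient-based methods prefer.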

10. Bagging (Meta-heuristic)

Bagging, short for Bootstrap Aggregating, is an ensemble learning technique that combines multiple models to improve accuracy and reduce variance. It involves training several instances of the same model on different bootstrap samples of the data (random samples drawn with replacement) and then combining their predictions: by majority vote for classification tasks, or by averaging for regression tasks.
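A minimal sketch of bagging for classification follows. The base learner here is a 1-nearest-neighbor classifier on one-dimensional data, chosen only because it is short; the function names, the ensemble size, the fixed seed, and the data are all assumptions for the example.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]

def nearest_neighbor_model(sample):
    """Base learner: a 1-nearest-neighbor classifier over (value, label) pairs."""
    def predict(x):
        return min(sample, key=lambda pair: abs(pair[0] - x))[1]
    return predict

def bagging_fit(data, n_models=25, seed=0):
    """Train one base learner per bootstrap sample."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return [nearest_neighbor_model(bootstrap_sample(data, rng)) for _ in range(n_models)]

def bagging_predict(models, x):
    """Classification: majority vote across the ensemble."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Hypothetical labeled data: low values are "a", high values are "b"
data = [(1.0, "a"), (2.0, "a"), (3.0, "a"), (7.0, "b"), (8.0, "b"), (9.0, "b")]
ensemble = bagging_fit(data)
low_prediction = bagging_predict(ensemble, 1.0)
high_prediction = bagging_predict(ensemble, 9.0)
```

For regression, `bagging_predict` would return the mean of the models' outputs instead of the most common label.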

In conclusion, machine learning algorithms play a crucial role in solving complex problems and making data-driven decisions. Understanding the strengths and weaknesses of these algorithms is essential for selecting the most appropriate one for a given task. As the field of machine learning continues to evolve, new algorithms and variations of existing ones are constantly being developed, pushing the boundaries of what is possible in the realm of artificial intelligence.

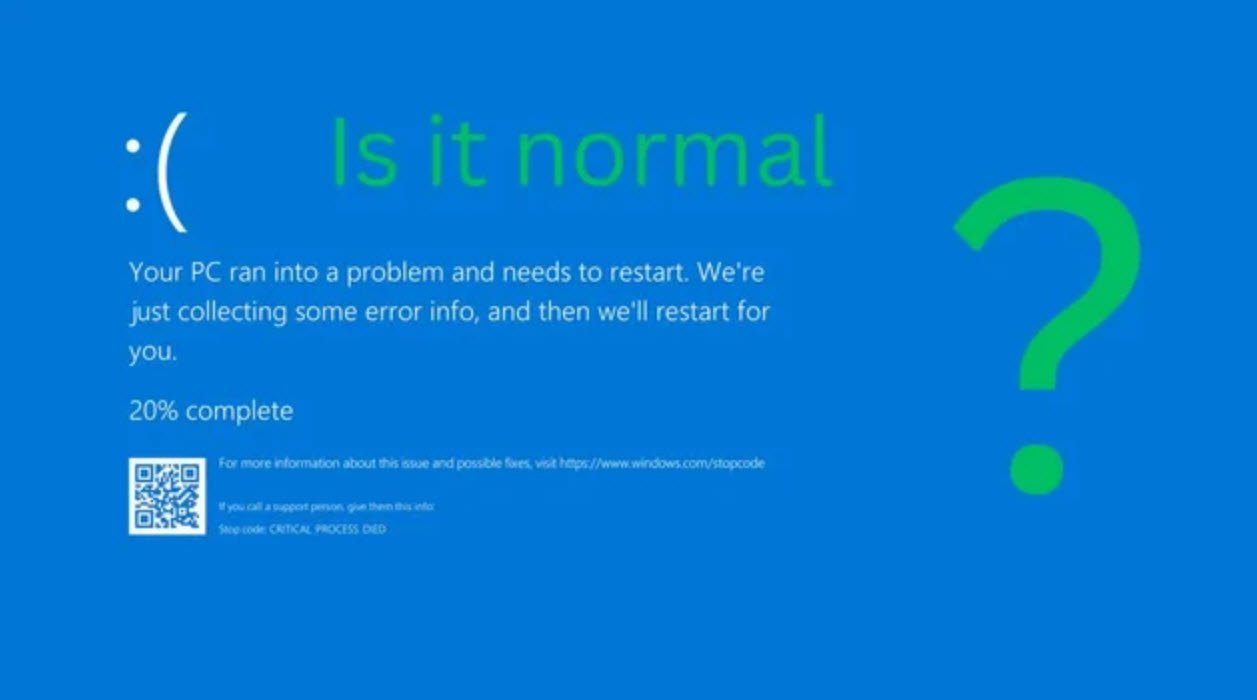
